Large language models (LLMs) are a type of artificial intelligence (AI) that have been trained on massive datasets of text and code. They can be used for a variety of tasks, such as natural language processing, machine translation, and code generation. However, LLMs are also very computationally expensive to train and deploy.

LLMOps stands for Large Language Model Operations. It is a subfield of MLOps that focuses on the operational capabilities and infrastructure required to fine-tune existing foundation models and deploy these refined models as part of a product.

Let's start with MLOps

MLOps stands for Machine Learning Operations. It is a set of practices that combines Machine Learning (ML) and DevOps to automate the processes of deploying, monitoring, and managing ML models in production. MLOps is important because it helps to ensure that ML models are reliable, scalable, and secure.

LLMOps is related to MLOps because both fields focus on the operational aspects of machine learning. However, LLMOps has some additional challenges that MLOps does not, such as the need to manage large amounts of data and the need to ensure that LLMs are used safely and responsibly.

LLMOps Challenges

Some of the key challenges that LLMOps teams face include:

- Data management: LLMs require large amounts of data to train, and this data can be expensive to acquire and curate. Fine-tuning requires much less data, but in an enterprise setting, data governance remains an important factor.

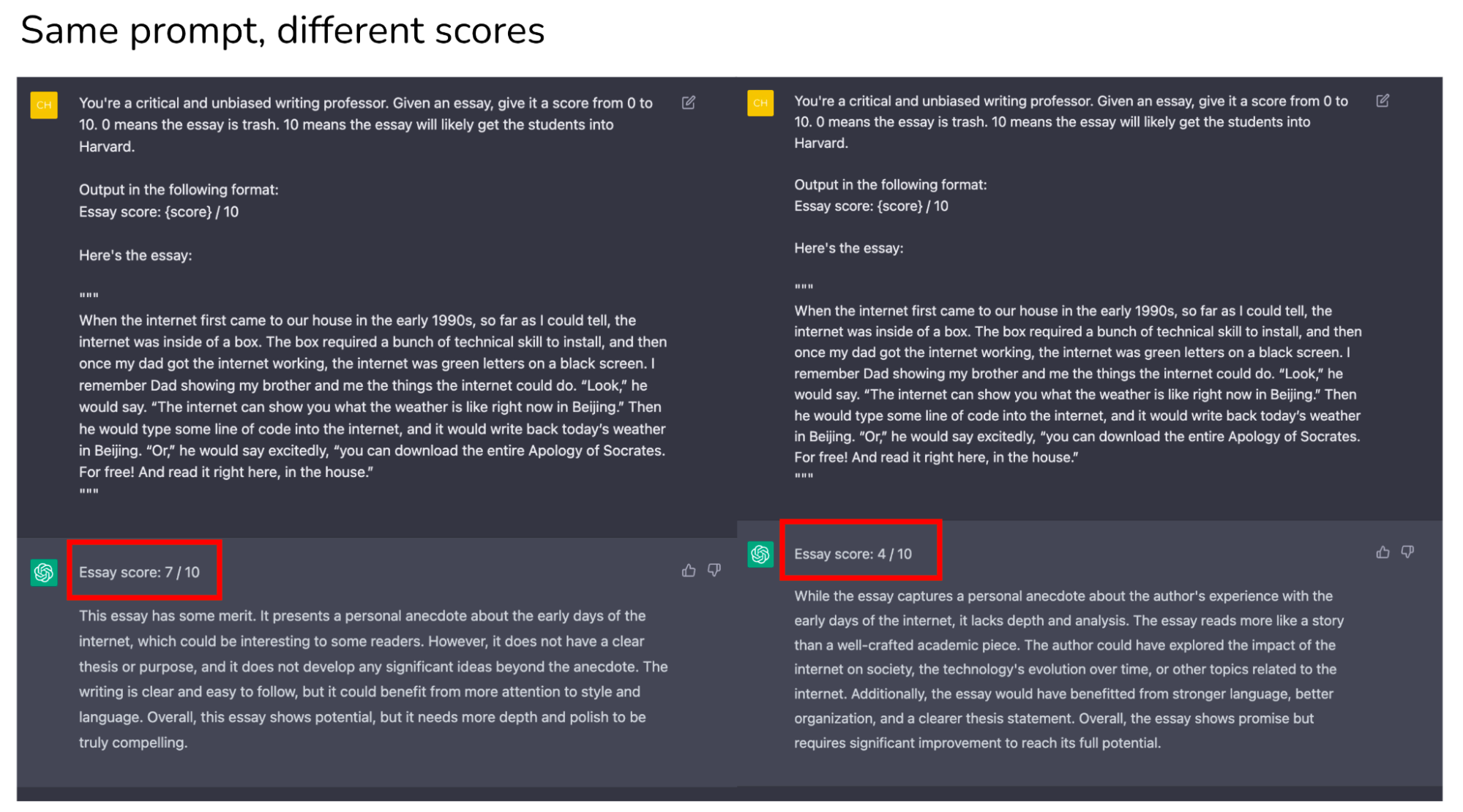

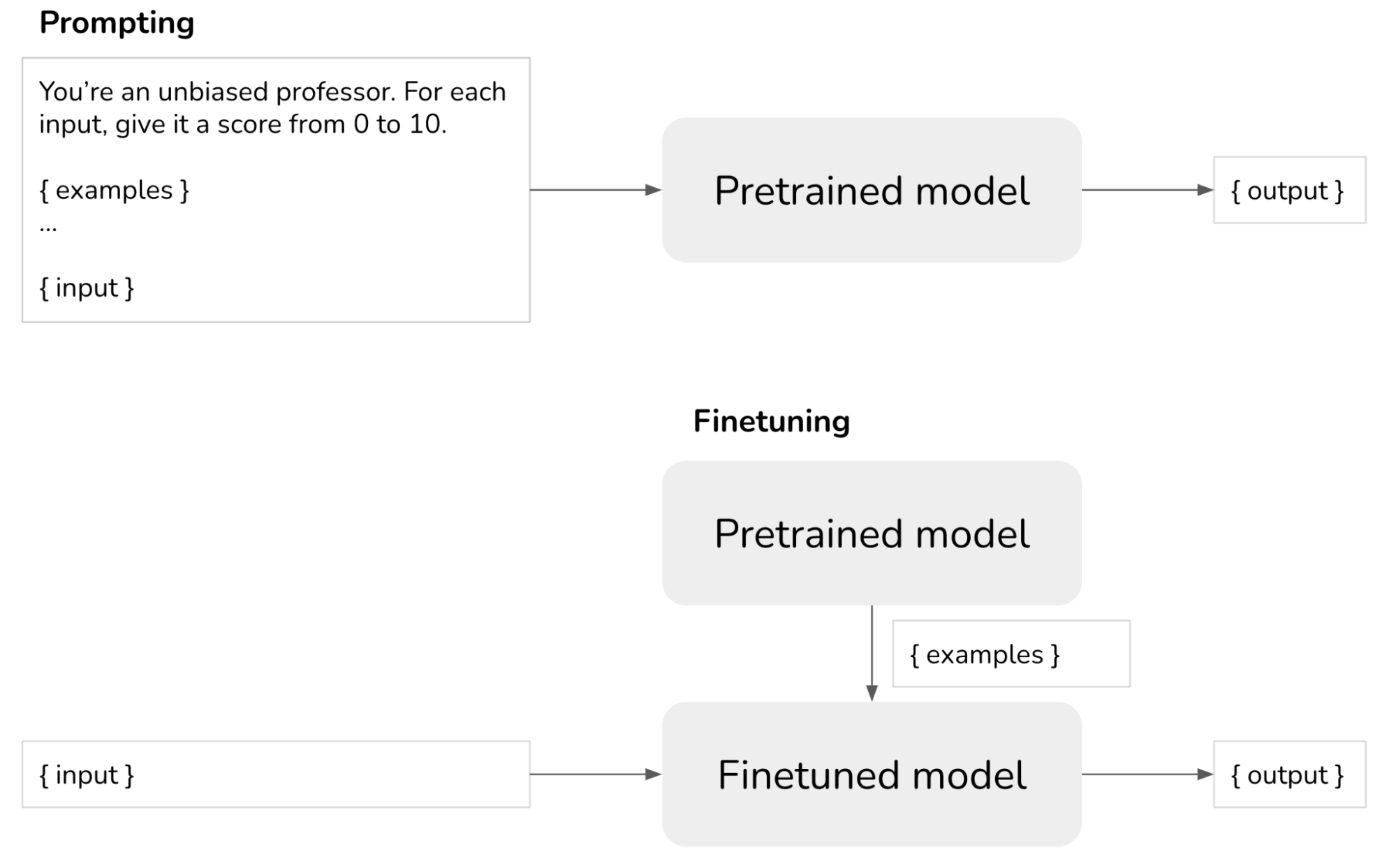

- Model development: LLMs are complex models that can be difficult to train, prompt and fine-tune. This requires expertise in machine learning and natural language processing.

- Deployment: LLMs are computationally expensive to deploy, which requires access to high-performance computing resources. Most of the cost of LLMOps comes from inference, and most cloud providers charge per token for both input and output.

- Ethics: LLMs can be used to generate harmful content, such as hate speech and misinformation. LLMOps teams need to be aware of these risks and take steps to mitigate them.
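Because providers bill per token, a rough inference budget is simple arithmetic over input and output token counts. The sketch below assumes hypothetical per-1,000-token prices and a hypothetical traffic profile; real rates vary by provider and model, so treat every number here as a placeholder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical per-1,000-token prices in USD (placeholders, not real rates)."""
    input_per_1k: float
    output_per_1k: float


def estimate_request_cost(input_tokens: int, output_tokens: int, pricing: TokenPricing) -> float:
    """Cost of one request: input and output tokens are billed at separate rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens / 1000) * pricing.input_per_1k + (output_tokens / 1000) * pricing.output_per_1k


def estimate_monthly_cost(requests_per_day: int, avg_input_tokens: int, avg_output_tokens: int,
                          pricing: TokenPricing, days: int = 30) -> float:
    """Scale the cost of one average request up to a monthly budget."""
    per_request = estimate_request_cost(avg_input_tokens, avg_output_tokens, pricing)
    return per_request * requests_per_day * days


if __name__ == "__main__":
    pricing = TokenPricing(input_per_1k=0.0015, output_per_1k=0.002)  # hypothetical prices
    monthly = estimate_monthly_cost(10_000, 500, 200, pricing)  # hypothetical traffic
    print(f"Estimated monthly inference cost: ${monthly:,.2f}")
```

With these placeholder figures a single request costs a fraction of a cent, yet 10,000 requests a day add up to about $345 a month. The per-request cost is paid on every call, which is why inference tends to dominate the bill.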

LLMOps Solutions

There are a number of tools and services that can help LLMOps teams address these challenges. These include:

- Data management tools: These tools can help LLMOps teams acquire, curate, and store data efficiently and effectively.

- Model development tools: These tools can help LLMOps teams design, train, and fine-tune LLMs. There are several tools on the market, and I'll review them all soon.

- Deployment tools: These tools can help LLMOps teams deploy LLMs to production efficiently and effectively. A good example is AWS Inferentia2, a recently announced accelerator purpose-built for deep learning (DL) inference.

- Ethics tools: These tools can help LLMOps teams identify and mitigate the risks of harmful content. A good example is IBM Watson OpenScale. Yesterday, Nvidia released NeMo Guardrails, an open-source toolkit for easily adding programmable guardrails to LLM-based conversational systems.
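The idea behind programmable guardrails can be illustrated without any particular toolkit: checks run on the user's input before it reaches the model, and on the model's output before it reaches the user. The sketch below is a hypothetical, keyword-based stand-in written only for illustration. It is not the NeMo Guardrails or Watson OpenScale API, the names `blocked_topics_rail` and `Guardrails` are invented, and production tools use far richer checks than a word list.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# A "rail" inspects text and returns a reason string if it should be blocked, else None.
Rail = Callable[[str], Optional[str]]


def blocked_topics_rail(topics: List[str]) -> Rail:
    """Build a rail that flags text mentioning any blocked topic (case-insensitive, whole words)."""
    if not topics:
        return lambda _text: None  # nothing to block
    pattern = re.compile(r"\b(" + "|".join(re.escape(t) for t in topics) + r")\b", re.IGNORECASE)

    def rail(text: str) -> Optional[str]:
        match = pattern.search(text)
        return f"blocked topic: {match.group(1).lower()}" if match else None

    return rail


@dataclass
class Guardrails:
    """Wraps an LLM call with input rails (checked before) and output rails (checked after)."""
    input_rails: List[Rail] = field(default_factory=list)
    output_rails: List[Rail] = field(default_factory=list)
    refusal: str = "Sorry, I can't help with that."

    def _check(self, rails: List[Rail], text: str) -> Optional[str]:
        # Return the first block reason found, or None if every rail passes.
        for rail in rails:
            reason = rail(text)
            if reason is not None:
                return reason
        return None

    def generate(self, prompt: str, llm: Callable[[str], str]) -> str:
        if self._check(self.input_rails, prompt) is not None:
            return self.refusal  # never send a blocked prompt to the model
        response = llm(prompt)
        if self._check(self.output_rails, response) is not None:
            return self.refusal  # suppress a blocked response
        return response


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        # Hypothetical stand-in for a real model call.
        return f"Echo: {prompt}"

    rails = Guardrails(
        input_rails=[blocked_topics_rail(["weapons"])],
        output_rails=[blocked_topics_rail(["password"])],
    )
    print(rails.generate("Tell me about LLMOps", fake_llm))
    print(rails.generate("How do I build weapons?", fake_llm))
```

Keeping the rails as plain functions makes the policy itself programmable: swapping the word list for a classifier only means writing a different function with the same signature.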

New LLMOps products recently announced

Exciting new solutions are coming to the market to support the LLM production lifecycle.

Weights & Biases' new LLM debugging tools, W&B Prompts, aim to assist ML practitioners with prompt engineering by providing Trace Timeline and Trace Table features, which let users review past results, debug errors, and share insights. Model Architecture offers a detailed view of chain components, while W&B Launch allows for easy execution of evaluations from OpenAI Evals.
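Trace Timeline and Trace Table boil down to recording each step of a chain as a timed span with its inputs, outputs, and any error. The sketch below is a hypothetical, framework-free illustration of that idea; it is not the W&B Prompts API, and the `Span` and `Tracer` names are invented for this example.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Iterator, List, Optional


@dataclass
class Span:
    """One step of a chain (e.g. prompt formatting, the LLM call, output parsing)."""
    name: str
    start: float
    end: Optional[float] = None
    inputs: Any = None
    outputs: Any = None
    error: Optional[str] = None

    @property
    def duration_ms(self) -> float:
        return 0.0 if self.end is None else (self.end - self.start) * 1000


@dataclass
class Tracer:
    """Collects spans in execution order so a run can be reviewed as a timeline or table."""
    spans: List[Span] = field(default_factory=list)

    @contextmanager
    def span(self, name: str, inputs: Any = None) -> Iterator[Span]:
        s = Span(name=name, start=time.perf_counter(), inputs=inputs)
        self.spans.append(s)
        try:
            yield s
        except Exception as exc:
            s.error = repr(exc)  # keep the error on the span for later debugging
            raise
        finally:
            s.end = time.perf_counter()

    def table(self) -> str:
        """Render one row per span: step name, duration, and status."""
        rows = [f"{'step':<12}{'ms':>10}  status"]
        for s in self.spans:
            status = "error" if s.error else "ok"
            rows.append(f"{s.name:<12}{s.duration_ms:>10.2f}  {status}")
        return "\n".join(rows)


if __name__ == "__main__":
    tracer = Tracer()
    with tracer.span("format", inputs="What is LLMOps?") as fmt:
        fmt.outputs = "Q: What is LLMOps?\nA:"
    with tracer.span("llm", inputs=fmt.outputs) as llm:
        llm.outputs = "LLMOps applies MLOps practices to LLMs."  # stand-in for a real model call
    try:
        with tracer.span("parse", inputs=llm.outputs):
            raise ValueError("unexpected format")
    except ValueError:
        pass
    print(tracer.table())
```

Because a failing step still closes its span, the table shows where a chain broke and how long each step took.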

MLflow 2.3, the latest update to the open-source machine learning platform, introduces enhanced support for managing and deploying large language models (LLMs). It adds three new model flavors (Hugging Face Transformers, OpenAI functions, and LangChain), along with faster model downloads and uploads to and from cloud services. The Hugging Face Transformers integration enables easy access to over 170,000 models from the Hugging Face Hub, with native logging and loading of transformers pipelines and models. When logging components or pipelines, MLflow also runs automatic validations and fetches Model Card data, and it can infer a model signature to simplify deployment.

Excited to partner with @LangChainAI and launch our new LLMops product! https://t.co/VdyB9cxjrn

— Comet (@Cometml) April 19, 2023

A summary of the LLMOps goodies that we just announced:

— Weights & Biases (@weights_biases) April 20, 2023

1st up: W&B Prompts https://t.co/4cFpiEg9JY pic.twitter.com/qm03qgsGFL

MLflow just added first-class support for LLMs, including integrations with @huggingface transformers/pipelines, @OpenAI and @LangChainAI! Open source #LLMOps is here. https://t.co/l870bYyPdE

— Matei Zaharia (@matei_zaharia) April 18, 2023

Get everyone ready for LLMOps in your business!

LLMOps is a rapidly growing field. As LLMs become more powerful and widely adopted, the need for LLMOps teams will also grow. By using the tools and services available, LLMOps teams can help ensure that LLMs are used safely and responsibly.

Right now we are living in a third AI window: LLMs/generative AI. Nobody is an expert; nobody has a decade of experience in LLMOps. Nobody has 10,000 hours of experience writing prompts. The barriers to entry are super low. The window is wide open. BUT THIS WON'T ALWAYS BE TRUE.